Challenges for LEO HTS Megaconstellations: Terrestrial Network Integration


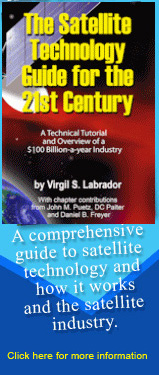

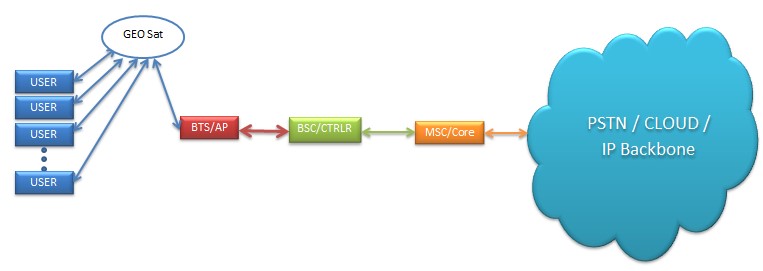

Network Architecture for a Typical MNO/ISP Network

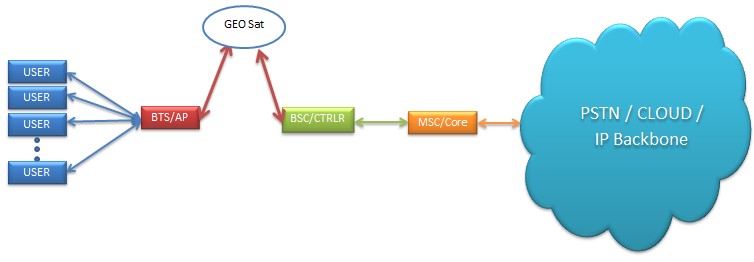

Satellite communication has long served terrestrial networks as a complement rather than a competitor. The best-known use case is cellular backhaul over VSAT (Very Small Aperture Terminal), which connects remotely installed BTSs (Base Transceiver Stations) of a cellular network through a geostationary satellite to the respective BSC (Base Station Controller) and ultimately to the core network. This technology enabled MNOs (Mobile Network Operators) to grow their subscriber base in remote communities that could not be connected to their network grid through microwave or fibre transmission. A similar network architecture, commonly referred to as bent-pipe or FSS (Fixed Satellite Service), has been used to meet the network requirements of ISPs (Internet Service Providers) and of the government, corporate, oil and gas, and mining sectors. In these networks the DCE (Data Communication Equipment) and DTE (Data Terminal Equipment) at the ends of the link are networking switches and routers instead of the BTS and BSC.
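Because this backhaul crosses a geostationary bent-pipe link, every frame travels from the remote VSAT up to the satellite and back down to the hub before it reaches the BSC. The following is a minimal sketch of the resulting propagation delay, assuming standard constants (WGS-84 equatorial radius, nominal GEO altitude of 35,786 km) and a spherical Earth. The helper names are hypothetical, and processing, queuing and modem delays are ignored.

```python
import math

# Assumed standard constants (not taken from the article).
EARTH_RADIUS_KM = 6378.137          # WGS-84 equatorial radius
GEO_ALTITUDE_KM = 35786.0           # nominal geostationary altitude
SPEED_OF_LIGHT_KM_S = 299792.458    # speed of light in vacuum


def slant_range_km(elevation_deg: float, altitude_km: float = GEO_ALTITUDE_KM) -> float:
    """Earth station to satellite distance for a given elevation angle (spherical Earth)."""
    e = math.radians(elevation_deg)
    r = EARTH_RADIUS_KM
    return math.sqrt((r + altitude_km) ** 2 - (r * math.cos(e)) ** 2) - r * math.sin(e)


def bent_pipe_one_way_delay_ms(elev_remote_deg: float, elev_hub_deg: float) -> float:
    """Propagation delay remote VSAT -> satellite -> hub, ignoring all processing delays."""
    path_km = slant_range_km(elev_remote_deg) + slant_range_km(elev_hub_deg)
    return path_km / SPEED_OF_LIGHT_KM_S * 1000.0


if __name__ == "__main__":
    # Best case: both terminals directly below the satellite (90 deg elevation).
    print(f"{bent_pipe_one_way_delay_ms(90, 90):.1f} ms")
    # An illustrative geometry: 30 deg at the remote site, 45 deg at the hub.
    print(f"{bent_pipe_one_way_delay_ms(30, 45):.1f} ms")
```

With both terminals at 90° elevation the path is simply twice the GEO altitude, roughly 239 ms one way; lower elevation angles lengthen the slant range and push the delay higher.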

VSAT Network Architecture

Furthermore, the backhaul segments must be dimensioned so that they do not become bottlenecks either. Taking into account the maximum and average data rates of the radio interface, core dimensioning is balanced so that the core network is neither over-dimensioned (raising operational cost) nor under-dimensioned (causing lost revenue and customer dissatisfaction). Because the 2G and 3G TDM (Time Division Multiplexing) backhaul infrastructure still needs to be supported alongside the high data rates of LTE/LTE-A, the IP (Internet Protocol) core and backhaul networks must be highly scalable. Backhauling between the base station and the radio controller is preferably carried over fibre optics or microwave radio links. Although the traditional solution has been circuit-switched TDM, the transition to an all-IP concept in the supporting stages of the core network is clearly under way.
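One common way to make the peak/average balance concrete is the "single peak, all average" provisioning heuristic: size the link for whichever is larger, one cell running at its peak rate or every cell carrying its busy-hour mean. The sketch below applies that rule and adds legacy TDM capacity as E1 circuits (2.048 Mbit/s each). The heuristic, the `CellSite` type and all figures are illustrative assumptions, not the article's dimensioning method.

```python
from dataclasses import dataclass

E1_MBPS = 2.048  # capacity of one E1 TDM circuit


@dataclass
class CellSite:
    """Hypothetical per-cell traffic figures in Mbit/s (illustrative values only)."""
    peak_mbps: float            # peak rate the radio interface can deliver in this cell
    busy_hour_mean_mbps: float  # average load during the busy hour


def backhaul_capacity_mbps(cells: list[CellSite], tdm_e1_links: int = 0) -> float:
    """'Single peak / all average' IP demand plus legacy 2G/3G TDM circuits.

    Assumes only one cell peaks at a time while the others carry their
    busy-hour mean, and that legacy traffic still needs dedicated E1s.
    """
    tdm_mbps = tdm_e1_links * E1_MBPS
    if not cells:
        return tdm_mbps
    all_average = sum(c.busy_hour_mean_mbps for c in cells)
    single_peak = max(c.peak_mbps for c in cells)
    return max(single_peak, all_average) + tdm_mbps


if __name__ == "__main__":
    # Three sectors on one site, plus four E1s for co-located 2G/3G equipment.
    sectors = [CellSite(peak_mbps=150.0, busy_hour_mean_mbps=30.0) for _ in range(3)]
    print(round(backhaul_capacity_mbps(sectors, tdm_e1_links=4), 3))
```

Here the 150 Mbit/s single peak outweighs the 90 Mbit/s all-average total, and the four E1s bring the requirement to about 158.2 Mbit/s. Provisioning well above this figure corresponds to the over-dimensioned case, well below it to the under-dimensioned one.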

MSS Network Architecture

The requirement for minimum received power levels at given data rates leads to the adoption of different access methods in the uplink and downlink. What matters is not the received power alone but the useful signal power compared with interference and noise, expressed as the SINR (Signal to Interference plus Noise Ratio), whose value indicates the QoS level. In fast-fading environments (dense urban areas) with many multipath propagation components, OFDMA is the most suitable choice for the LTE downlink. However, its drawbacks, notably a high PAPR (Peak-to-Average Power Ratio) and the resulting lower power efficiency, lead the UE (User Equipment) to use the more energy-efficient SC-FDMA in the uplink. Combining OFDMA and SC-FDMA provides coverage areas comparable to HSPA (High Speed Packet Access) networks while offering higher data rates.

Handover functionality plays an important role in ensuring QoS wherever LTE/LTE-A coverage is sufficiently good. The X2 interface between eNodeBs improves the handover success rate in LTE compared with previous techniques. If an LTE service outage occurs, the connection falls back automatically to 2G/3G networks, which lowers the QoS level because the overall data throughput is reduced. This fallback procedure could seriously affect some applications involving real-time data streaming, but it would not have the same effect on VoIP (Voice over IP) connections. Nevertheless, from the user's point of view, continuity of service, even at reduced throughput, matters more than a complete breakdown of the service.
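The PAPR argument for SC-FDMA in the uplink can be checked numerically. The sketch below compares plain OFDM with DFT-spread OFDM (the form in which LTE realises SC-FDMA) on the same localized allocation of random QPSK symbols, using only the standard library. The FFT size, allocation width, modulation and trial count are assumed illustrative values, far smaller than real LTE numerologies, and the naive DFT is used only to keep the example dependency-free.

```python
import cmath
import math
import random

N_FFT = 64   # assumed IFFT size (illustrative only)
M_SUB = 16   # assumed number of adjacent subcarriers allocated to one user


def dft(x, inverse=False):
    """Naive O(n^2) DFT, or IDFT (scaled by 1/n) when inverse=True."""
    n = len(x)
    sign = 1 if inverse else -1
    twiddle = [cmath.exp(sign * 2j * math.pi * i / n) for i in range(n)]
    out = []
    for k in range(n):
        acc = sum(x[t] * twiddle[(k * t) % n] for t in range(n))
        out.append(acc / n if inverse else acc)
    return out


def papr_db(signal):
    """Peak-to-average power ratio of a complex baseband block, in dB."""
    powers = [abs(s) ** 2 for s in signal]
    return 10 * math.log10(max(powers) / (sum(powers) / len(powers)))


def random_qpsk(n, rng):
    """n unit-energy QPSK symbols."""
    return [complex(rng.choice((-1, 1)), rng.choice((-1, 1))) / math.sqrt(2) for _ in range(n)]


def ofdm_symbol(rng):
    # QPSK symbols mapped directly onto M adjacent subcarriers, then IFFT.
    grid = random_qpsk(M_SUB, rng) + [0j] * (N_FFT - M_SUB)
    return dft(grid, inverse=True)


def sc_fdma_symbol(rng):
    # Same symbols DFT-spread first, then the same localized mapping and IFFT.
    grid = dft(random_qpsk(M_SUB, rng)) + [0j] * (N_FFT - M_SUB)
    return dft(grid, inverse=True)


def mean_papr_db(generator, trials, seed=1):
    """Average PAPR (in dB) over `trials` random symbols from a seeded generator."""
    rng = random.Random(seed)
    return sum(papr_db(generator(rng)) for _ in range(trials)) / trials


if __name__ == "__main__":
    print(f"OFDM    mean PAPR: {mean_papr_db(ofdm_symbol, 200):.2f} dB")
    print(f"SC-FDMA mean PAPR: {mean_papr_db(sc_fdma_symbol, 200):.2f} dB")
```

Averaged over a few hundred random symbols, the DFT-spread signal shows the lower mean PAPR: its time samples are interpolated versions of constant-envelope QPSK symbols rather than a sum of independently modulated subcarriers, which lets the UE power amplifier operate closer to saturation.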

With around a decade of experience in the satellite communications industry, Muhammad Furqan is a renowned writer and analyst with multiple publications and keynote appearances on various international platforms. Currently based in Australia, he is conducting research on the radio frequency electromagnetic spectrum for mobile and satellite communications at Queensland University of Technology. He can be reached at: info@muhammadfurqan.com


Waheeb Butt received his B.S. degree in Electronics from IIU Pakistan in 2007 and his M.Sc. degree in Communication and Signal Processing from Newcastle University, UK, in 2009. Since then, he has been involved in teaching and research activities in the field of telecommunications. He is currently pursuing a PhD in wireless communications at RWTH Aachen University, Germany. He can be reached at: waheeb.butt@ice.rwth-aachen.de